“My favorite party trick?”

A tweet yesterday from Prabhakar Raghavan, “a Senior Vice President at Google”:

My favorite party trick? Showing someone Lens who has never used it before. It usually goes like… 👀🔍🤯🤩😍 Lens is so helpful that people now use it for 12B visual searches a month — a 4x increase in just 2 years

- How do we know that it is “so helpful”?

- Do the metrics suggest that?

- What would “so helpful” mean?

- What constitutes a search?

- Does this include “reformulated searches”—alternate angles? better focus? a live camera scanning across a piece of printed text?

- How should we contextualize 12 billion searches a month?
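One rough way to start on that last question is simple arithmetic on the numbers in the tweet itself. The sketch below is only that: the 12B, 4x, and 2-year figures come from the tweet, while the average month length, the steady-growth reading of "4x in 2 years", and the function and key names are my own assumptions for illustration. It says nothing about helpfulness, unique users, or what counts as a search.

```python
# Figures quoted in the tweet.
SEARCHES_PER_MONTH = 12e9  # "12B visual searches a month"
GROWTH_FACTOR = 4          # "a 4x increase"
GROWTH_YEARS = 2           # "in just 2 years"

# Assumption (not from the tweet): an average month is 365 / 12 days.
DAYS_PER_MONTH = 365 / 12
SECONDS_PER_DAY = 24 * 60 * 60


def contextualize(per_month, factor, years, days_per_month=DAYS_PER_MONTH):
    """Derive a few back-of-the-envelope rates from a monthly search count."""
    per_day = per_month / days_per_month
    return {
        "per_day": per_day,
        "per_second": per_day / SECONDS_PER_DAY,
        # Monthly volume implied for the start of the growth period.
        "implied_earlier_per_month": per_month / factor,
        # Assumption: growth was steady, so the yearly multiplier is the
        # `years`-th root of the overall factor.
        "annual_growth_multiplier": factor ** (1 / years),
    }


summary = contextualize(SEARCHES_PER_MONTH, GROWTH_FACTOR, GROWTH_YEARS)
for name, value in summary.items():
    print(f"{name}: {value:,.1f}")
```

Under these assumptions the tweet implies roughly 3 billion searches a month two years earlier and a doubling each year. Whether a "search" here is one deliberate query or one frame from a live camera remains the open question.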

Lily Ray, “a prominent SEO (search engine optimization) professional”, expresses a surprise that hints that the tool, as situated, may not be as helpful as it could be:

Surprised more people don’t search with Lens yet but I think most people still don’t know how/where to access it. Especially iPhone users

Ray has tweeted something like this before, here in December¹:

I strongly believe Google Lens will be the biggest transformation we see in how people use search in the next 2-3 years.

That is, assuming Google figures out how to get the rest of the non-nerd world to know it exists 😅

The Google Search Liaison (GSL; for an analysis of the function of this role, see Griffin & Lurie (2022)) engaged with Ray’s recent comment, in reply² and in a quote-tweet, here:

How have you used Lens to find something visually?

Replies suggested:

- translation

- identifying clothing in public

- identifying birds, plants, bugs

- learning about a painting

More than a party trick

I have shared Google Lens as a sort of party trick too, but the greatest benefit for me has been that its unclear potential offers an opportunity to raise questions about what other modalities or purposes of searching we might develop (with, of course, risks and benefits to consider).

Here, from a footnote in my dissertation (Griffin, 2022):

While search engines are designed around digitized text, there are other modalities available for search, all materially bound. Voice-based search transformed audio into text. Some people may recognize digital images as search seeds, with reverse image search. Some search engines and other search tools also support searching from a photograph. There is also some support for searching with music or even humming. Chen et al. (2022) demonstrate search queries from electroencephalogram (EEG) signals. As web search expands in these directions it may be necessary to pursue new approaches to showing which pictures, sounds, smells, or thoughts might effectively link questions and answers.

Related post: still searching for gorillas

Searches:

- Wikipedia[“Google Lens”]

- Reddit[“Google Lens”]

- Twitter[“Google Lens”]

- SemanticScholar[“Google Lens”]

Compare and contrast with:

- iNaturalist

- Reddit’s r/whatisthisthing, etc.

- reverse image search

- Wikipedia: Jelly (app)

- Google Images: About this image; cc: Mike Caulfield

Footnotes

While search generation has been the excitement of late, I don’t think it unreasonable to imagine that searching from images may have a significant transformative effect in the coming years. You can find additional tweets from Ray via Twitter[from:lilyraynyc (“google lens” OR multisearch)] (N.b. Twitter search functionality increasingly requires the user to manually click or press enter after following search links.)↩︎

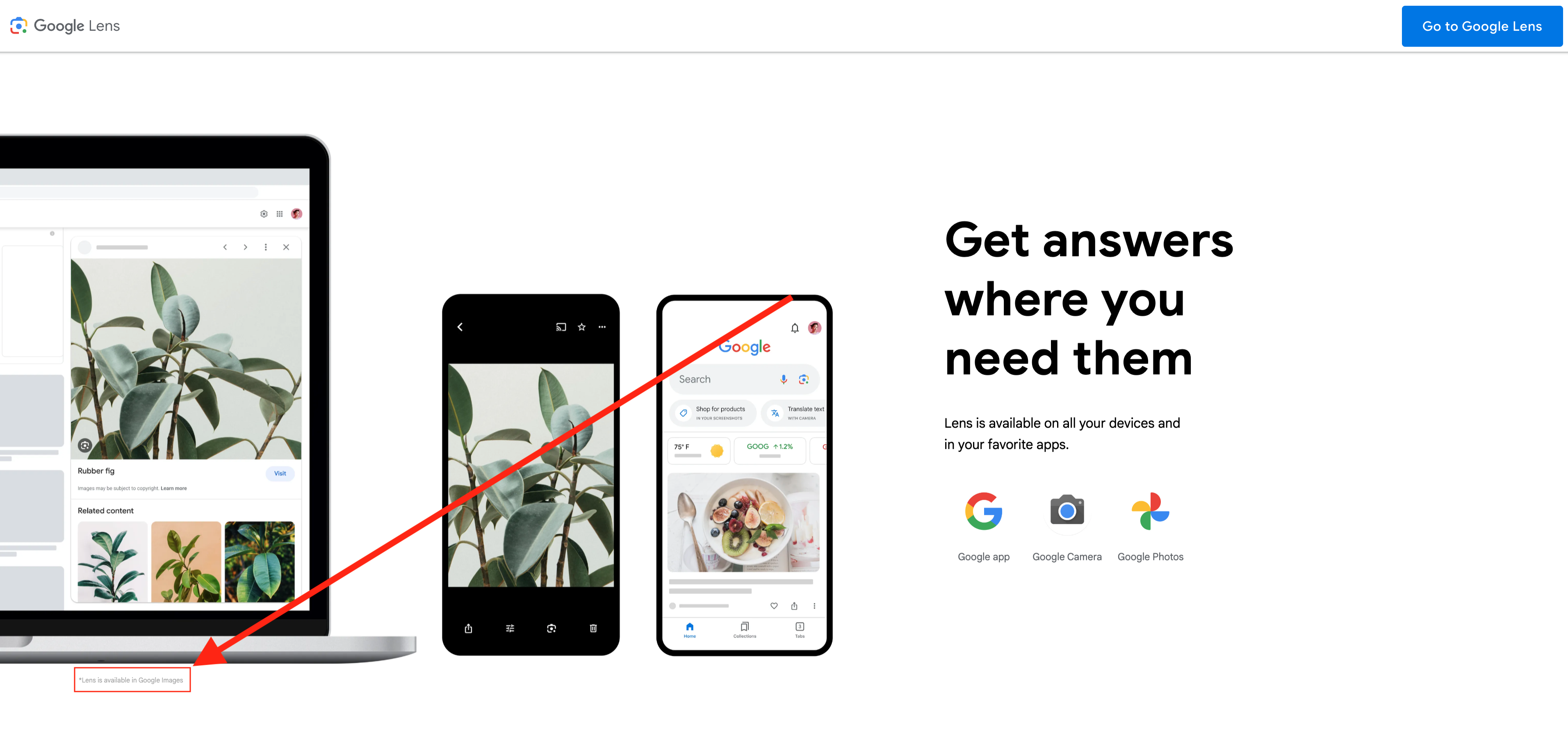

Pertinent to Ray’s comments, the link shared by the GSL (lens.google.com) appears to add friction: it encourages mobile app downloads and does not give direct access to the browser-based interface.

If you scroll down you will see small text on the lower left: “*Lens is available in Google Images”.

Image 1. Screenshot from the Google Lens page. In small font below a depiction of an image search on a laptop: “*Lens is available in Google Images”.

Lens is available through the browser via images.google.com (clicking the camera icon will present an interface for inputting an image to “Search any image with Google Lens”). See also Google Search Help > Search with an image on Google.↩︎

References

Chen, X., Ye, Z., Xie, X., Liu, Y., Gao, X., Su, W., Zhu, S., Sun, Y., Zhang, M., & Ma, S. (2022). Web search via an efficient and effective brain-machine interface. Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, 1569–1572. https://doi.org/10.1145/3488560.3502185 [chen2022bmsi]

Griffin, D. (2022). Situating web searching in data engineering: Admissions, extensions, repairs, and ownership [PhD thesis, University of California, Berkeley]. https://danielsgriffin.com/assets/griffin2022situating.pdf [griffin2022situating]

Griffin, D., & Lurie, E. (2022). Search quality complaints and imaginary repair: Control in articulations of Google Search. New Media & Society, 0(0), 14614448221136505. https://doi.org/10.1177/14614448221136505 [griffin2022search]