qChecker - a script to check for quotes in a source document

github.com/danielsgriffin/qChecker

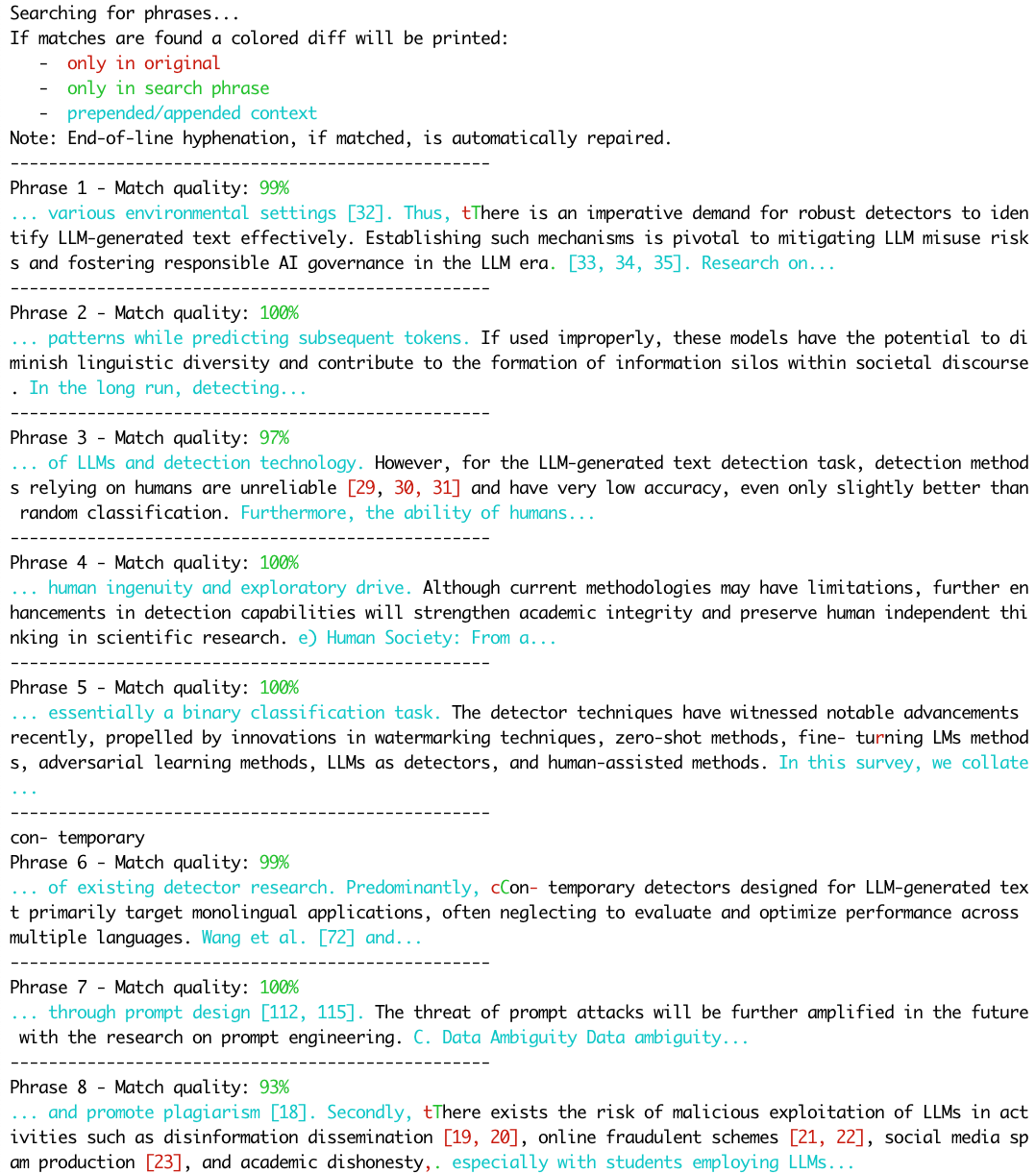

I wrote a utility script to quickly check if quotations exist in a source document. It was written to spot-check Anthropic’s Claude in regards to general comments from Simon Willison today about asking for quotations from PDFs. There was also an exchange between Willison and Mike Young about Claude inventing quotes. These sorts of “hallucinations” are common, though there are some indications that Claude may not invent quotations, at least, as much as other LLMs.

Obviously for many quotations and source documents it is possible to conduct thorough searches. You can copy a quote and try to search with your favorite PDF reader. This can be complicated by how the OCR was performed and how the PDF reader handles line-ending hyphenations and strings that span columns or more than one page. It can be frustrated by using exact quotes to try to avoid the pollution from multiple non-exact matches. It can be both time-consuming and prone to error. With this script you have some assurances that incorrect quotations might jump out (or at least gain some ability to interrogate the quotation-source pairing). Note that incomplete or decontextualized quotations are not as easily addressable.

I am not proposing this as a solution. There are many other approaches and probably better tools. I am using the chance to write this script to thing through the work of validating such responses.

Usage

You can input a filename for a local PDF and a list of quotations and it will return a match quality score and a colored diff for the best match against the claimed quotation.

Limitations

- There are currently no tests written for the functions.

- It successfully finds quotes that span columns and pages (at least in my single PDF test) but does not find quotes that are interrupted by a page dedicated entirely to a table.

Example

- input PDF: https://arxiv.org/pdf/2310.14724.pdf

-

prompt:

Given the paper’s content, could you extract and provide direct quotations that represent the key findings or pivotal points of the arguments presented?

-

response:

Here are some key quotes extracted from the paper that represent pivotal points:

- “There is an imperative demand for robust detectors to identify LLM-generated text effectively. Establishing such mechanisms is pivotal to mitigating LLM misuse risks and fostering responsible AI governance in the LLM era.”

- “If used improperly, these models have the potential to diminish linguistic diversity and contribute to the formation of information silos within societal discourse.”

- “However, for the LLM-generated text detection task, detection methods relying on humans are unreliable, and have very low accuracy, even only slightly better than random classification.”

- “Although current methodologies may have limitations, further enhancements in detection capabilities will strengthen academic integrity and preserve human independent thinking in scientific research.”

- “The detector techniques have witnessed notable advancements recently, propelled by innovations in watermarking techniques, zero-shot methods, fine-tuning LMs methods, adversarial learning methods, LLMs as detectors, and human-assisted methods.”

- “Contemporary detectors designed for LLM-generated text primarily target monolingual applications, often neglecting to evaluate and optimize performance across multiple languages.”

- “The threat of prompt attacks will be further amplified in the future with the research on prompt engineering.”

- “There exists the risk of malicious exploitation of LLMs in activities such as disinformation dissemination, online fraudulent schemes, social media spam production, and academic dishonesty.”

In this example case Claude skipped the in-text quotations and also corrected an alternative spelling in the example (preprint) PDF (changing fine-turning to fine-tuning).

See also the Double-check-response tag.

Added January 26, 2024

Related:

Hmmm, @YouSearchEngine strips out the citations when you click copy. Compare @perplexity_ai, which provides the footnotes and links at bottom, and @phindsearch (shown) which in-lines the citations as markdown links includes a copy icon, and has alt. options in the share popup.